In today’s world, the term “machine learning” is on the tip of nearly every tech enthusiast’s tongue. It’s the driving force behind virtual assistants, self-driving cars, recommendation systems, and so much more. But have you ever wondered where it all began? How did we get from mechanical calculators in the 17th century to artificial intelligence algorithms that can recognize faces and understand natural language?

The history of machine learning is a captivating journey through time, marked by pivotal moments, ingenious inventors, and groundbreaking innovations. In this article, we will uncover the history of machine learning and better understand the technology that is redefining our world.

What are the Key Milestones in Machine Learning History?

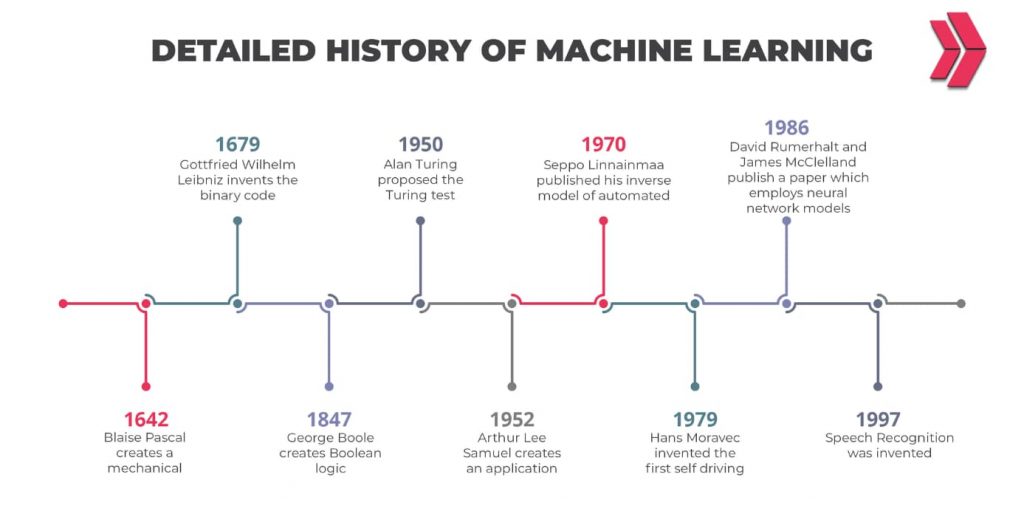

Machine learning history spans many advances, but the years 1952, 1970, 1979, 1986, 1997, and 2014 stand out. The story begins in 1642, when Blaise Pascal built a mechanical calculator. Let’s take a look at the timeline.

1642: Blaise Pascal creates a mechanical calculator that can add and subtract directly, and multiply and divide through repeated operations.

1679: Gottfried Wilhelm Leibniz devises the binary number system.

1847: George Boole creates Boolean logic, a form of algebra in which all values can be reduced to the binary values true and false.

1936: Alan Turing proposes a universal machine that could read and execute a set of instructions.

1950: Alan Turing proposes the Turing test as a criterion for whether a machine can be said to think.

1952: Arthur Lee Samuel writes a program that helps an IBM computer get better at checkers the more it plays.

1970: Seppo Linnainmaa publishes the reverse mode of automatic differentiation, a technique for computing the derivative of a function specified by a computer program. Applied to neural networks, it is known today as backpropagation and is the standard way to train them.

1979: The Stanford Cart, a wheeled robot with a movable television camera developed by Hans Moravec, is often described as an early self-driving vehicle. That year, it successfully crossed a room full of chairs in about five hours without human intervention.

1986: Psychologists David Rumelhart and James McClelland publish a paper outlining a paradigm known as parallel distributed processing, which employs neural network models for machine learning.

1997: Sepp Hochreiter and Jürgen Schmidhuber publish long short-term memory (LSTM), a recurrent neural network architecture used in deep learning. LSTM-based methods were later used for speech recognition and translation in products such as Amazon’s Alexa, Apple’s Siri, and Google Translate.

1999: A prototype computer-aided diagnosis (CAD) intelligent workstation analyzed 22,000 mammograms and reportedly detected cancer 52% more accurately than radiologists did.

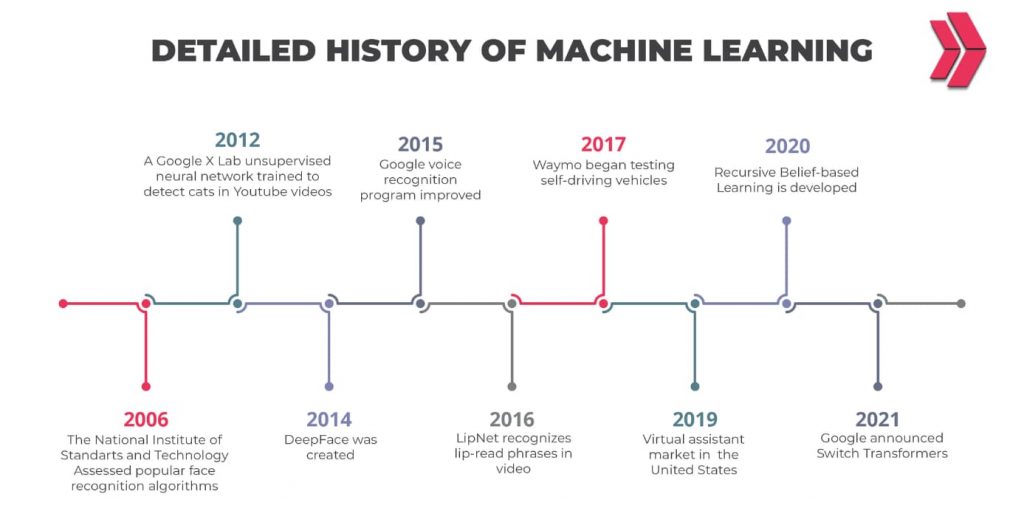

2006: The National Institute of Standards and Technology evaluated popular face recognition algorithms using 3-D scans and high-resolution iris images; some of the systems could even distinguish between identical twins. In the same year, Geoffrey Hinton and collaborators, including Ruslan Salakhutdinov, published influential work on deep belief networks, a type of artificial neural network that proved very effective for machine learning tasks.

2007: Long short-term memory began outperforming more traditional speech recognition systems.

2012: An unsupervised neural network built at Google X Lab learned to detect cats in YouTube videos with 74.8% accuracy. In the same year, AlexNet, a deep convolutional neural network, won the ImageNet Large Scale Visual Recognition Challenge, demonstrating the power of deep learning for image classification.

2014: Facebook introduced DeepFace, an algorithm capable of recognizing or verifying people in images with near-human accuracy. In the same year, a chatbot was reported to have passed the Turing test by persuading 33% of human judges that it was a Ukrainian teenager named Eugene Goostman.

2015: Using a CTC-trained LSTM, Google’s voice recognition reportedly improved by 49 percent. AlphaGo, a deep learning program developed by Google DeepMind, defeated European Go champion Fan Hui; it went on to defeat world champion Lee Sedol in March 2016.

2016: LipNet, a lip-reading system from researchers at the University of Oxford and DeepMind, recognizes lip-read phrases in video with a reported accuracy of 93.4%.

2017: Waymo began testing self-driving vehicles in the United States with backup drivers only in the back seat. It later launched a commercial autonomous taxi service in the Phoenix area.

2019: Amazon reportedly holds about 70% of the U.S. smart speaker market, driven by its Alexa virtual assistant.

2020: Facebook AI Research introduced Recursive Belief-based Learning (ReBeL), a general RL+Search algorithm. In the same year, DeepMind released Efficient Non-Convex Reformulations, a verification algorithm based on a novel non-convex reformulation of the convex relaxations used in neural network verification. OpenAI also released GPT-3, a large language model that demonstrated the ability of deep learning models to generate human-quality text.

2021: DeepMind released Player of Games, which can play both perfect-information and imperfect-information games. In the same year, Google also announced Switch Transformers, a technique for training language models with over a trillion parameters.

2023: OpenAI released GPT-4, available in ChatGPT and Bing, which promises better reliability, creativity, and problem-solving skills. Google announced Bard, a large language model (LLM) that can generate text, translate languages, write different kinds of creative content, and answer questions in an informative way.

Who Are the Pioneering Figures in Machine Learning?

The history of machine learning has been shaped by several pioneering figures who contributed significantly to the field’s development and understanding. These individuals have been instrumental in shaping the theoretical foundations, algorithms, and applications of machine learning.

List of Pioneering Figures:

- Alan Turing – Known as the father of computer science, Alan Turing’s ideas about “machines that can learn” laid the foundational stones for artificial intelligence and machine learning.

- Arthur Samuel – Credited with coining the term “machine learning,” Arthur Samuel developed one of the earliest self-learning algorithms through his work on checkers-playing computers.

- Frank Rosenblatt – Developed the Perceptron, a type of artificial neural network, which opened new doors in the field of machine learning.

- Geoffrey Hinton – Known as one of the “Godfathers of Deep Learning,” Geoffrey Hinton’s work in neural networks and deep learning algorithms has revolutionized the field.

- Yann LeCun – Instrumental in developing Convolutional Neural Networks (CNNs), Yann LeCun’s work has been pivotal in image and video recognition tasks.

- Andrew Ng – Known for his contributions to online education in machine learning and for co-founding Google Brain, Andrew Ng has made machine learning accessible to the masses.

- Yoshua Bengio – Another one of the “Godfathers of Deep Learning,” Bengio’s work has been influential in the development and understanding of deep learning architectures.

Table of Pioneering Figures:

| Name | Contribution | Notable Works |
|---|---|---|
| Alan Turing | Foundations of AI & machine learning | Turing Test |
| Arthur Samuel | Coined “Machine Learning” | Checkers-playing computer program |
| Frank Rosenblatt | Invented the Perceptron | The Perceptron |
| Geoffrey Hinton | Deep Learning, Neural Networks | Backpropagation Algorithm |
| Yann LeCun | Convolutional Neural Networks | LeNet Architecture |
| Andrew Ng | Education, Co-founded Google Brain | Coursera Machine Learning Course |
| Yoshua Bengio | Deep Learning architectures | Neural Probabilistic Language Models |

What Technologies and Algorithms Have Shaped Machine Learning?

Machine learning has undergone a significant transformation over the years, evolving from basic algorithms to complex models and technologies. Various algorithms and technological advancements have played a crucial role in shaping the landscape of machine learning as we know it today. Let’s delve into some of the key technologies and algorithms that have had a lasting impact on this fascinating field.

Classical Algorithms:

- Linear Regression – One of the simplest techniques used for supervised learning, mainly for solving regression problems.

- Logistic Regression – An extension of linear regression, commonly used for classification tasks.

- Decision Trees – Widely used for classification and regression tasks, they break down a dataset into smaller subsets while simultaneously developing an associated decision tree.

- K-Nearest Neighbors (K-NN) – A type of instance-based learning, or lazy learning, where the function is approximated locally, and all computation is deferred until function evaluation.

- Naive Bayes – Based on Bayes’ theorem, Naive Bayes algorithms are particularly suited when dimensionality is high.

- Support Vector Machines (SVM) – Particularly useful for classification problems in high dimensional spaces.

- Random Forest – An ensemble learning method that fits multiple decision tree classifiers on various sub-samples of the dataset and uses averaging to improve the predictive accuracy.
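
To make one of the classical algorithms above concrete, here is a minimal sketch of K-Nearest Neighbors in plain Python. The function name `knn_predict`, the toy `TRAIN` data, and the choice of Euclidean distance with `k=3` are all assumptions made for illustration; a real project would more likely use a library such as Scikit-learn. Note how "training" is just storing the data, and all the work happens at prediction time, which is what "lazy learning" means.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    train: list of (features, label) pairs, where features is a tuple of numbers.
    """
    # Sort the stored examples by Euclidean distance to the query point.
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    # Count the labels of the k closest examples and return the most common one.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical toy dataset: two well-separated clusters of 2-D points.
TRAIN = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((2.0, 1.5), "small"),
    ((6.0, 6.5), "large"),
    ((7.0, 6.0), "large"),
    ((6.5, 7.5), "large"),
]

print(knn_predict(TRAIN, (1.2, 1.4)))  # query near the first cluster
print(knn_predict(TRAIN, (6.8, 6.9)))  # query near the second cluster
```

With an odd `k` and two classes, the vote cannot tie; larger `k` smooths the decision at the cost of blurring class boundaries.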

Advanced Algorithms:

- Neural Networks – Basic building blocks for deep learning, loosely inspired by the human brain and able to perform a wide range of tasks.

- Convolutional Neural Networks (CNN) – Highly effective at processing grid-structured data such as images, and widely used in image recognition tasks.

- Recurrent Neural Networks (RNN) – Specialized for processing sequences, making them highly effective for natural language processing and time-series analysis.

- Generative Adversarial Networks (GANs) – Consist of two neural networks, the Generator and the Discriminator, that work against each other; mostly used for generating data that can pass as real.

- Reinforcement Learning Algorithms – Techniques like Q-learning, Deep Q Network (DQN), and various policy gradient methods fall under this category, commonly used in training models for gaming, navigation, and real-world simulation.
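
As a rough illustration of the smallest possible neural network from the list above, the sketch below trains a single sigmoid neuron with gradient descent to reproduce the logical AND function. Everything here is an assumption chosen for brevity: the names `train_neuron` and `predict`, the zero initialization, the learning rate, and the number of epochs. Real networks stack many such units and are normally built with frameworks such as TensorFlow or PyTorch.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, epochs=5000, lr=0.5):
    """Fit one sigmoid neuron with per-sample gradient descent on log loss."""
    w = [0.0, 0.0]  # one weight per input
    b = 0.0         # bias term
    for _ in range(epochs):
        for (x1, x2), y in samples:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = p - y  # gradient of the log loss w.r.t. the pre-activation
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b

def predict(w, b, x):
    """Return 1 if the neuron's output is at least 0.5, else 0."""
    return 1 if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else 0

# Truth table for logical AND, used as a tiny training set.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

w, b = train_neuron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])
```

A single neuron can only learn linearly separable functions such as AND; functions like XOR need at least one hidden layer, which is where backpropagation comes in.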

Technologies:

- GPUs (Graphics Processing Units) – Revolutionized the training of large neural networks by significantly accelerating computations.

- Big Data Technologies – Hadoop, Spark, and other big data technologies have made it easier to process massive datasets, providing the raw material for machine learning algorithms.

- Cloud Computing – With the advent of AWS, Azure, and Google Cloud, machine learning models can now be trained and deployed at scale, easily and affordably.

- AutoML and Hyperparameter Optimization Tools – Technologies like AutoML have automated many aspects of machine learning, making it accessible to people even with limited machine learning expertise.

- Machine Learning Frameworks – TensorFlow, PyTorch, and Scikit-learn have simplified the implementation of complex algorithms, providing a platform for development and research.

Through a combination of these groundbreaking algorithms and technologies, machine learning has achieved remarkable progress over the years. These elements together have set the stage for the ongoing advancements in machine learning, facilitating solutions to increasingly complex problems across various sectors.

How Is Machine Learning Applied in Different Sectors?

Machine learning has found applications across a myriad of sectors, revolutionizing traditional methods and paving the way for more effective, efficient, and intelligent systems. The adaptability of machine learning algorithms has made them indispensable in addressing complex issues and enhancing various industries. Below, we explore how machine learning is transforming different sectors.

Healthcare

- Disease Prediction and Prevention – Machine learning algorithms analyze medical records to predict and prevent diseases like diabetes, heart disease, and cancer.

- Drug Discovery – Machine learning models can predict how molecules will behave and how likely they are to make an effective treatment.

- Medical Imaging – Techniques like Convolutional Neural Networks (CNNs) are used to improve the diagnosis from X-rays, MRIs, and other imaging technologies.

Finance

- Risk Assessment – Machine learning models assess the credit risk of individuals and businesses more accurately than traditional methods.

- Algorithmic Trading – Machine learning algorithms can predict price changes and trade automatically in response to market changes.

- Fraud Detection – Sophisticated machine learning algorithms can detect fraudulent transactions in real time.

Retail

- Customer Segmentation and Personalization – Retailers use machine learning algorithms to analyze purchasing history and recommend products to individual customers.

- Inventory Management – Predictive models help retailers manage and optimize their inventory.

- Sales Forecasting – Machine learning can analyze multiple variables to forecast future sales with higher accuracy.

Energy

- Demand Forecasting – Machine learning algorithms analyze data from various sources to predict energy demand.

- Predictive Maintenance – Predictive algorithms can forecast equipment failures and schedule timely maintenance.

- Optimization of Energy Usage – Algorithms analyze data to optimize the generation and distribution of energy, making the system more efficient and eco-friendly.

Transportation

- Traffic Prediction and Management – Machine learning algorithms can predict traffic patterns and suggest optimal routes.

- Autonomous Vehicles – Machine learning algorithms process the enormous amount of data required for the safe operation of autonomous vehicles.

- Supply Chain Optimization – Machine learning can improve supply chain efficiency by predicting the best routes and modes of transportation.

Entertainment

- Recommendation Systems – Think Netflix or Spotify; machine learning algorithms analyze user behavior and preferences to recommend movies, songs, or shows.

- Content Generation – Algorithms like GANs (Generative Adversarial Networks) are used to create new content, like artwork or music.

Agriculture

- Crop Prediction – Machine learning models can predict crop yields based on various factors like weather conditions, soil quality, and crop type.

- Precision Agriculture – Machine learning algorithms analyze data from the field to optimize farming practices and improve yields.

By taking over complex data analysis and decision-making processes, machine learning has created unprecedented improvements across various sectors. Its applications are not limited to the ones mentioned above; the sky’s the limit when it comes to how machine learning can enhance our lives and the industries that serve us.

What Are the Future Prospects of Machine Learning?

The future of machine learning holds immense promise, offering prospects that could significantly transform not only the technology sector but also every other industry and many aspects of our daily lives. As advancements continue to be made in computing power, data availability, and algorithmic innovation, we can expect the impact of machine learning to broaden and deepen. Here’s a glimpse into the future prospects of machine learning:

Advanced Automation

- Self-driving Cars – The technology behind autonomous vehicles is rapidly progressing, and machine learning will play a critical role in making self-driving cars safe, efficient, and ubiquitous.

- Industrial Automation – From quality control to warehouse management, machine learning algorithms can handle complex tasks at a scale and speed unattainable by humans.

Healthcare Innovations

- Personalized Medicine – Machine learning models could tailor medical treatment plans to individuals, taking into account their medical history, genetic makeup, and even lifestyle factors.

- Telemedicine and Remote Monitoring – Machine learning algorithms could analyze data from wearable devices to remotely monitor patient health, providing timely alerts and treatment recommendations.

Sustainable Practices

- Climate Modeling – Advanced machine learning models could better predict the impact of climate change, helping policymakers make more informed decisions.

- Resource Optimization – From optimizing energy grids to reducing waste in manufacturing, machine learning could play a pivotal role in sustainability efforts.

Enhanced User Experiences

- Augmented Reality (AR) and Virtual Reality (VR) – Machine learning algorithms can create more immersive and interactive AR and VR experiences.

- Personal Assistants – The next generation of Siri, Alexa, and Google Assistant will become more intuitive and helpful as machine learning algorithms become more advanced.

Artificial General Intelligence (AGI)

- Human-level Intelligence – While still a subject of ongoing research and debate, the future might see the development of machine learning algorithms that can perform any intellectual task that a human being can do.

- Ethical and Societal Impact – As machine learning algorithms continue to advance, there will be an increasing need for ethical considerations, including fairness, interpretability, and data privacy.

Evolving Algorithms

- Transfer Learning – Future machine learning models will be better at applying knowledge learned from one task to a different task, even one that is only loosely related.

- Reinforcement Learning – Algorithms will become more efficient at learning from the environment, opening new possibilities in robotics, game theory, and complex system optimization.

- Quantum Machine Learning – With the advent of quantum computing, machine learning algorithms could perform complex calculations at speeds unimaginable today.

The future prospects of machine learning are not just a continuation of its current capabilities but represent transformative potential that could redefine how we live, work, and think. From healthcare and sustainability to entertainment and personal convenience, machine learning will continue to offer new avenues for innovation and problem-solving, making it one of the most exciting fields to watch in the coming years.


How to Learn Machine Learning?

Embarking on a journey to learn machine learning is an exciting yet challenging endeavor that requires a structured approach. Before diving into machine learning algorithms, it’s beneficial to have a strong grounding in programming and data science concepts. Python is often the go-to language for machine learning, and knowledge in statistics, calculus, and linear algebra can be immensely helpful. Once you’re comfortable with these foundations, you can proceed to learning algorithms, practicing hands-on projects, and even specializing in areas like deep learning or natural language processing.

If you’re wondering how to gain foundational skills in data science, especially if you’re starting without any prior experience, check out our blog post titled “How To Start A Career Path In Data Science Without Experience In 2023?”. This guide will walk you through the initial steps you need to take to build your expertise in data science, which forms the bedrock for mastering machine learning. With the right combination of foundational knowledge and specialized skills, you’ll be well on your way to becoming proficient in machine learning.